Then I started thinking, can I do something similar to what OPTIMIZE operation does internally in case of Online DDL which allows concurrent DML operations. Of course, downtime cannot be an option here since this is a key table and this will mean downtime for the complete application, which is not acceptable. which implies having a longer downtime window for concurrent DML operations. So, the table copy method is used instead. There are some FULLTEXT indexes on this table. OPTIMIZE operation cannot be done using INPLACE algorithm when FULLTEXT indexes are present. During the commit phase, table metadata changes are committed. During the prepare phase, a lock is taken briefly over metadata and a new table(intermediate) is created. This operation is completed “in place” and an exclusive table lock is taken briefly, only during the prepare and commit phase of the operation. OPTIMIZE operation uses Online DDL for InnoDB tables, which reduces the downtime for concurrent DML operations. This can help us in using storage more efficiently along with providing the solution to the above problem.

In my opinion, the latter option ( OPTIMIZE) should be used here, since there are a lot of deletions on this table which could have resulted in table data to be highly fragmented. This rebuilds/defragments the table data so that data is stored more compactly, updates the indexes accordingly and then does an ANALYZE on the table.

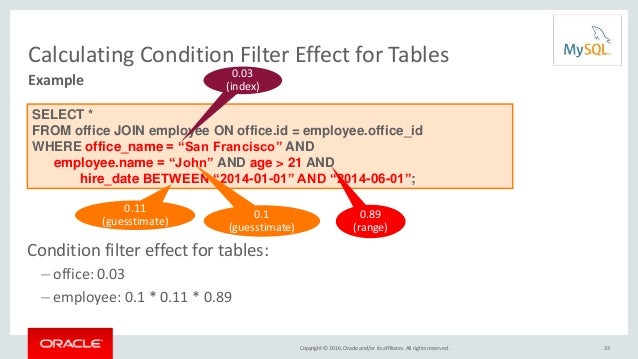

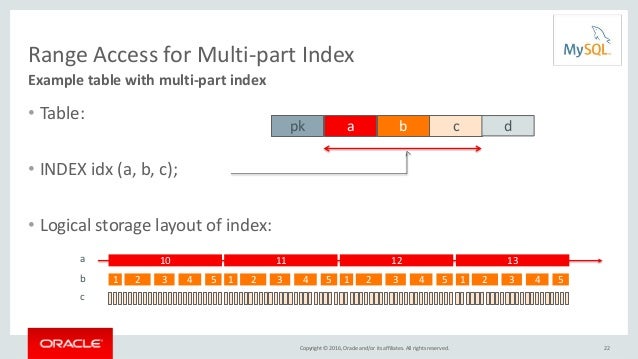

Statistics are collected using some random sample pages from new data in the table This clears the current statistics and new statistics are collected when the table is accessed next time. As per the MySQL documentation, these statistics for a table can be re-estimated explicitly by using the following methods: We found the root cause to be the Outdated Table Statistics. Table Statistics can become outdated if there are a lot of insertions and deletions on the given table (which is true in our case). So, when MySQL optimizer is not picking up the right index in EXPLAIN, it could be caused by outdated table statistics. The Root Causeīefore running any query, MySQL optimiser makes decisions about the execution plan (which can be seen via EXPLAIN), which in turn uses table statistics and conditions in clauses like WHERE, ORDER BY, etc. Then I started reading more about why this can be happening. The same query used the required index on these databases, which I got to know using EXPLAIN command.Īt this point, I was literally clueless about why this is happening and I panicked and rushed towards the coffee dispenser, my usual escape when I am stuck with some problem and had quite a few cups of coffee and regained my consciousness. But to verify, I checked the query on other databases with similar schemas (QA and dev environment) and even on replica database. Since this feature was being used extensively in production from the past few months without any issues and no change on this feature went in the last few weeks, there is no way the given query was wrong. When I dug deeper into the issue using the EXPLAIN command, I noticed that this query was not using an index which it should be using. I started wondering what happened suddenly that query performance degraded.

The table being talked about is a frequently used table, having around 300M rows approximately, with a lot of additions and deletions from the table happening on a regular basis. Pretext to understand the issue: We use MySQL 5.7(InnoDb) as our main database. I immediately checked our logs and noticed that a particular query was taking much longer than expected for a few users, and even timing out for few others. The Problem At Handįew customers have suddenly started to feel slowness and intermittent failures in a particular feature.

Mysql optimizer script software#

It was a normal day, I was working on some new feature additions in the software and suddenly an issue pops up.

0 kommentar(er)

0 kommentar(er)